A machine learning system carries data through an end-to-end flow. Pipelines automate and manage that flow of data together with the machine learning models. They are modular data-flow management tools that help us build and manage production-grade, autonomous machine learning algorithms.

In machine learning pipelines, each step is iterative and cyclical. The main objective of a machine learning pipeline is to build a high-performance, scalable, fault-tolerant algorithm. The entire process should be developed to optimize latency for the chosen mode of data ingress: online (real time) or offline (batch processing).

The first step is to define the business problem and its requirements: the mode of data ingress, the type of dataset, the preparatory steps involved, and the evaluation metrics used to analyze performance.

Data Ingestion

The local endpoints and the sources of data are configured for the algorithm, along with the type of ingestion: online or offline.

Data Preparation

This is the step where we process the data, extract features and select the attributes to use.

Data Segregation

This is the stage where we lay out the plan for splitting the data into training, testing and validation subsets. The model is checked on new data and compared with previous cycles of the algorithm, in order to analyze and optimize performance through hyperparameters.
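As a rough illustration of the split described above, here is a minimal sketch in plain Python. The function name `split_dataset` and the 70/15/15 proportions are assumptions chosen for the example, not values prescribed by the pipeline.

```python
import random


def split_dataset(rows, train_frac=0.7, val_frac=0.15, seed=0):
    """Shuffle rows and split them into train, validation and test lists."""
    if train_frac <= 0 or val_frac < 0 or train_frac + val_frac >= 1:
        raise ValueError("fractions must leave room for a test set")
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # whatever remains is the test set
    return train, val, test


# Hypothetical dataset: 100 row identifiers.
train, val, test = split_dataset(range(100))
print(len(train), len(val), len(test))
```

A random shuffle like this suits independent rows. For time-ordered data, a chronological split is usually the safer choice, so that the model is never evaluated on data older than what it was trained on.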

Model Training

In this step, because of the modular nature of the pipeline, we are able to plug and fit multiple models and test them out on the training data.

Candidate Model Evaluation

Here we assess the performance of the model using the test and validation subsets of data. It is an iterative process, and various algorithms are tested until we get a model that sufficiently meets the requirements.
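To make the train-then-evaluate loop concrete, the following sketch fits two toy candidate models on training data and keeps the one with the lowest error on a validation subset. The classes `MeanModel` and `LinearModel`, the helper `select_best`, and the data are all hypothetical; a real pipeline would plug in its own models and metrics.

```python
from statistics import mean


class MeanModel:
    """Baseline candidate: always predicts the mean of the training targets."""

    def fit(self, xs, ys):
        self.value = mean(ys)
        return self

    def predict(self, xs):
        return [self.value for _ in xs]


class LinearModel:
    """Candidate: one-feature least-squares line."""

    def fit(self, xs, ys):
        x_bar, y_bar = mean(xs), mean(ys)
        sxx = sum((x - x_bar) ** 2 for x in xs)
        sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
        self.slope = sxy / sxx if sxx else 0.0
        self.intercept = y_bar - self.slope * x_bar
        return self

    def predict(self, xs):
        return [self.intercept + self.slope * x for x in xs]


def mse(y_true, y_pred):
    """Mean squared error, used here as the evaluation metric."""
    return mean((t - p) ** 2 for t, p in zip(y_true, y_pred))


def select_best(candidates, train, val):
    """Fit every candidate on train and score it on val; lower is better."""
    scores = {}
    for name, model in candidates.items():
        model.fit(*train)
        scores[name] = mse(val[1], model.predict(val[0]))
    return min(scores, key=scores.get), scores


# Made-up data following y = 2x + 1.
train = ([0, 1, 2, 3, 4, 5], [1, 3, 5, 7, 9, 11])
val = ([6, 7], [13, 15])
best_name, scores = select_best(
    {"mean": MeanModel(), "linear": LinearModel()}, train, val
)
print(best_name, scores)
```

Because every candidate exposes the same `fit` and `predict` methods, new models can be added to the dictionary without touching the selection logic, which is the modularity the pipeline relies on.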

Model Deployment

After the best-performing model is chosen, it is generally exposed through APIs and integrated with decision-making frameworks as part of an end-to-end analytics solution.

Performance Monitoring

This is the most important stage: here the model is continuously monitored to observe how it behaves in the real world. Calibration and new data collection then take place to incrementally improve the model and how accurately it generalizes.
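One simple way to picture continuous monitoring is a rolling window over recent prediction errors that flags when the model may need recalibration. The sketch below is only an illustration: the class `ErrorMonitor`, the window size and the threshold are assumptions, and production monitoring typically tracks more than a single error metric.

```python
from collections import deque


class ErrorMonitor:
    """Tracks absolute error over the most recent predictions."""

    def __init__(self, window=50, threshold=2.0):
        self.errors = deque(maxlen=window)  # old errors fall off automatically
        self.threshold = threshold

    def record(self, y_true, y_pred):
        self.errors.append(abs(y_true - y_pred))

    def mean_error(self):
        return sum(self.errors) / len(self.errors) if self.errors else 0.0

    def needs_retraining(self):
        # Only raise the flag once the window is full, to avoid noisy alerts.
        window_full = len(self.errors) == self.errors.maxlen
        return window_full and self.mean_error() > self.threshold


monitor = ErrorMonitor(window=3, threshold=1.0)

# The model starts out close to the observed values...
for y_true, y_pred in [(10, 10.2), (10, 9.9), (10, 10.1)]:
    monitor.record(y_true, y_pred)
healthy = monitor.needs_retraining()

# ...then drifts away from them.
for y_true, y_pred in [(10, 14), (10, 15), (10, 13)]:
    monitor.record(y_true, y_pred)
drifted = monitor.needs_retraining()

print(healthy, drifted)
```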

Model Training:

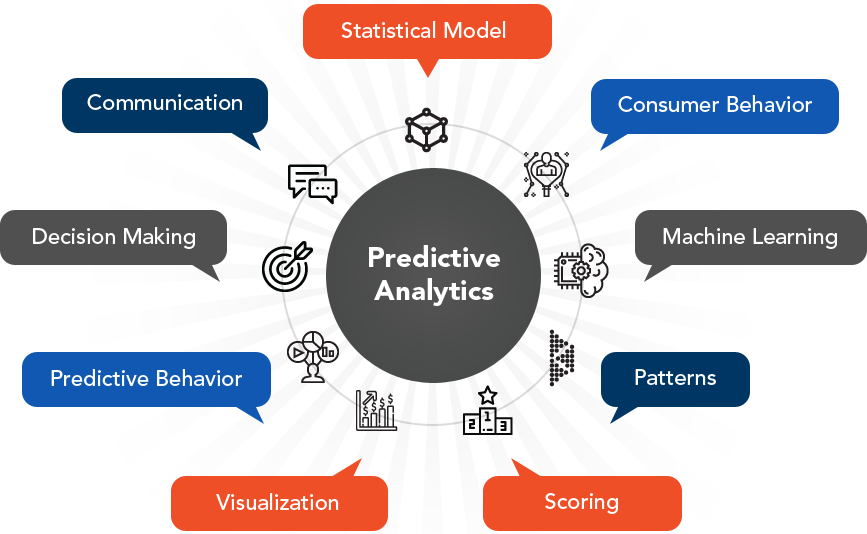

Predictive analysis uses predictive modeling, which applies computational and mathematical methods to build predictive models. These models examine both current and historical data to understand the underlying patterns in the two datasets, and then make forecasts, predictions or classifications of future events in real time. The model updates with the arrival of new data.

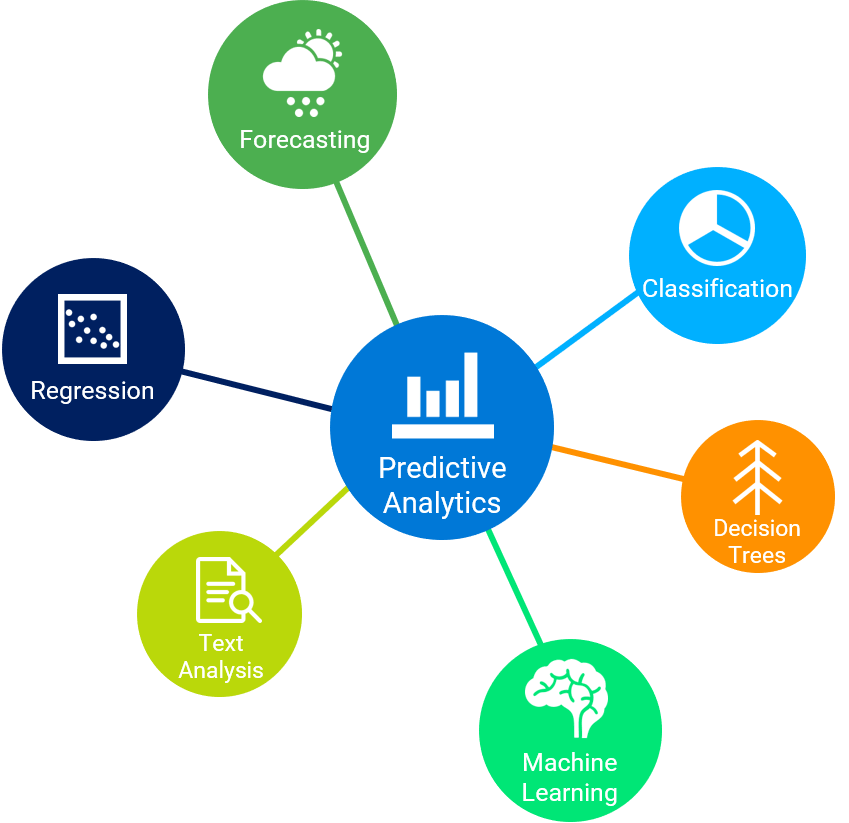

Predictive modeling is the heart of Predictive Analysis. Queppelin makes every effort to create the right predictive model with the right data and variables. Some common types of models:

- Forecast Models

It is one of the most widely used predictive analysis models. It is extensively used for metric value prediction, that is, estimating a numeric value for new data. A major advantage of such models is that they can take multiple inputs.

- Classification Models

It is one of the simplest predictive analysis models; it categorizes new data based on what it learned from the historical data. It helps guide decisive actions and can be applied to many use cases.

- Time Series Models

These models work on data with time as one of the input parameters. For example, they can predict the data for the next few weeks using the historical data of the last year.

A predictive model runs one or more Machine Learning and Deep Learning algorithms on the given data through an iterative process. Some of those are:

- Prophet

The time series and forecasting models use this algorithm, which was originally developed by Facebook. It is well suited to capacity planning and helps you allocate business resources.

- Random Forest

It is a popular algorithm that can do both classification and regression. Queppelin utilizes it to classify huge volumes of data. Predictive analytics normally uses bagging or boosting to reduce the error as far as possible. Random Forest uses bagging: it draws multiple subsets of the training data and trains a decision tree on each of them. The trees can be trained in parallel, and their outcomes are then averaged (for regression) or combined by majority vote (for classification).

- Gradient Boosted Model

This algorithm creates an ensemble of decision trees to come up with a classification model. In contrast to Random Forest, it uses boosting: it builds decision trees one at a time, and each tree corrects the errors made by the trees before it.
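The difference between bagging and boosting is easier to see side by side. The sketch below is a toy illustration in plain Python, not how Random Forest or a production gradient boosting library is implemented: it uses one-split regression trees ("stumps") on a single feature, squared-error residuals for boosting, and made-up data. A real Random Forest also samples features at random for each split, which is left out here. All function names and parameter values are assumptions for the example.

```python
import random
from statistics import mean


def fit_stump(xs, ys):
    """Fit a one-split regression tree (a "stump") on 1-D data."""
    best = None
    for threshold in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        if not right:  # largest x: nothing to split off
            continue
        l_mean, r_mean = mean(left), mean(right)
        error = (sum((y - l_mean) ** 2 for y in left)
                 + sum((y - r_mean) ** 2 for y in right))
        if best is None or error < best[0]:
            best = (error, threshold, l_mean, r_mean)
    if best is None:  # all x values identical: predict a constant
        constant = mean(ys)
        return lambda x: constant
    _, threshold, l_mean, r_mean = best
    return lambda x: l_mean if x <= threshold else r_mean


def bagging(xs, ys, n_trees=25, seed=0):
    """Train stumps independently on bootstrap samples and average them."""
    rng = random.Random(seed)
    n = len(xs)
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        trees.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return lambda x: mean(tree(x) for tree in trees)


def boosting(xs, ys, n_trees=25, learning_rate=0.3):
    """Train stumps one at a time, each on the residuals left so far."""
    base = mean(ys)
    preds = [base] * len(xs)
    trees = []
    for _ in range(n_trees):
        residuals = [y - p for y, p in zip(ys, preds)]
        tree = fit_stump(xs, residuals)  # the new tree targets current errors
        trees.append(tree)
        preds = [p + learning_rate * tree(x) for p, x in zip(preds, xs)]
    return lambda x: base + learning_rate * sum(tree(x) for tree in trees)


def mse(model, xs, ys):
    return mean((y - model(x)) ** 2 for x, y in zip(xs, ys))


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [x ** 2 for x in xs]  # toy data, made up for illustration
baseline = mse(lambda x: mean(ys), xs, ys)  # error of always predicting the mean
bagged = bagging(xs, ys)
boosted = boosting(xs, ys)
print(baseline, mse(bagged, xs, ys), mse(boosted, xs, ys))
```

In `bagging` the trees never see each other, so the loop could run in parallel. In `boosting` each tree depends on the predictions accumulated so far, so the trees have to be built in sequence.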